Your project can be on or off. Your project's priority can be changed. Your job can be changed. But the technology is always heading north!

Thursday, February 16, 2017

My co-worker Shawn's blog posts on Hadoop machine setup and installation

http://wp.huangshiyang.com/env-set-up-centos

Solving protobuf/Guava version conflicts in your Java applications

Due to the bad design of protobuf, we run into version conflicts from time to time. We finally figured out a routine way to solve this issue.

Assume:

1. You use Maven to resolve all dependency jar files.

2. These jar files contain different versions of the same Google classes, so your application fails to work. For example, the method MapMaker.keyEquivalence is not found (com.google.common.collect.MapMaker ships in both Guava and its predecessor, google-collections).

3. You have a Java decompiler installed.

Solution:

1. Rename the Maven repository (for example, rename .m2\repository to .m2\repository_backup).

2. Regenerate the Maven repository by building your project (mvn test or mvn package on its pom.xml), so that the new repository contains only the jars this build downloads.

3. In Windows Explorer, go to the .m2\repository folder and type '.jar' in the search box (top right corner).

4. Now all jar files are listed in the same window.

5. Copy them all and paste them into a single folder (e.g. c:\my_jars).

6. Move these jar files to a Linux machine, since we will need some Linux scripting there.

7. Assume all of these jar files are now in /tmp/myJars on your Linux machine.

8. Run the following script (this assumes the Java JDK is installed, so the jar tool is available on your Linux system; otherwise, install the JDK first):

[root@john1 myJars]# for x in *.jar; do if jar -tf "$x" | grep -q 'com/google/common/collect/MapMaker[$.]'; then echo "$x"; jar -tvf "$x" | grep 'com/google/common/collect/MapMaker[$.]'; fi; done

9. The script prints the name of every jar file that contains the class MapMaker, followed by its matching entries.

10. In my case, it found the following two jar files:

google-collections-1.0.jar

1479 Wed Dec 30 10:59:50 PST 2009 com/google/common/collect/MapMaker$1.class

1756 Wed Dec 30 10:59:50 PST 2009 com/google/common/collect/MapMaker$ComputationExceptionReference.class

2041 Wed Dec 30 10:59:50 PST 2009 com/google/common/collect/MapMaker$LinkedSoftEntry.class

2055 Wed Dec 30 10:59:50 PST 2009 com/google/common/collect/MapMaker$LinkedStrongEntry.class

guava-14.0.1.jar

227 Thu Mar 14 19:56:56 PDT 2013 com/google/common/collect/MapMaker$1.class

2329 Thu Mar 14 19:56:56 PDT 2013 com/google/common/collect/MapMaker$ComputingMapAdapter.class

2545 Thu Mar 14 19:56:56 PDT 2013 com/google/common/collect/MapMaker$NullComputingConcurrentMap.class

4134 Thu Mar 14 19:56:56 PDT 2013 com/google/common/collect/MapMaker$NullConcurrentMap.class

11. Use the Java decompiler to search for 'keyEquivalence'; you will find that only guava-14.0.1.jar contains it.

12. Remove google-collections-1.0.jar from your classpath. After that, there are no more complaints that MapMaker.keyEquivalence is not found, or similar errors.
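Steps 3 to 11 can also be approximated with a single Java program that runs on Windows or Linux, which avoids copying jars between machines. This is a minimal sketch under assumptions, not the procedure we actually used: the class name `JarClassFinder` and its arguments are hypothetical, `~/.m2/repository` is simply Maven's usual default location, and the reflection check in `declaresMethod` stands in for the decompiler search of step 11. It needs Java 8 or later.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.lang.reflect.Method;
import java.net.URL;
import java.net.URLClassLoader;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.jar.JarFile;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/** Hypothetical helper: which jars contain a class, and does that class declare a method? */
final class JarClassFinder {

    /** Returns every regular *.jar file under root that has an entry for className. */
    static List<Path> jarsContaining(Path root, String className) {
        // e.g. com.google.common.collect.MapMaker -> com/google/common/collect/MapMaker.class
        String entryName = className.replace('.', '/') + ".class";
        List<Path> jars;
        try (Stream<Path> paths = Files.walk(root)) {
            jars = paths.filter(Files::isRegularFile)
                    .filter(p -> p.toString().endsWith(".jar"))
                    .sorted()
                    .collect(Collectors.toList());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        List<Path> hits = new ArrayList<>();
        for (Path jar : jars) {
            try (JarFile jf = new JarFile(jar.toFile())) {
                if (jf.getEntry(entryName) != null) {
                    hits.add(jar);
                }
            } catch (IOException e) {
                System.err.println("Skipping unreadable jar " + jar + ": " + e.getMessage());
            }
        }
        return hits;
    }

    /** Loads className from this jar alone and checks its declared methods by name. */
    static boolean declaresMethod(Path jar, String className, String methodName) {
        // Parent loader null: only JDK classes are visible besides this jar, so a copy
        // of the same class elsewhere on the classpath cannot shadow it.
        try (URLClassLoader loader = new URLClassLoader(new URL[] {jar.toUri().toURL()}, null)) {
            return declaresMethod(loader, className, methodName);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    static boolean declaresMethod(ClassLoader loader, String className, String methodName) {
        try {
            Class<?> cls = Class.forName(className, false, loader); // false: skip static initializers
            for (Method m : cls.getDeclaredMethods()) { // includes non-public methods
                if (m.getName().equals(methodName)) {
                    return true;
                }
            }
            return false;
        } catch (ClassNotFoundException | LinkageError e) {
            // Class missing, or it needs types from other jars: reported as "not found",
            // so confirm with a decompiler when it matters.
            return false;
        }
    }

    public static void main(String[] args) {
        Path root = args.length > 0 ? Paths.get(args[0])
                : Paths.get(System.getProperty("user.home"), ".m2", "repository");
        String className = args.length > 1 ? args[1] : "com.google.common.collect.MapMaker";
        String methodName = args.length > 2 ? args[2] : "keyEquivalence";
        for (Path jar : jarsContaining(root, className)) {
            System.out.println(jar + " declares " + methodName + ": "
                    + declaresMethod(jar, className, methodName));
        }
    }
}
```

For example, `java JarClassFinder /tmp/myJars com.google.common.collect.MapMaker keyEquivalence` scans the folder from step 7 instead of the whole repository.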

Tuesday, February 14, 2017

Hadoop Proxy user - Superusers Acting On Behalf Of Other Users

https://hadoop.apache.org/docs/r2.7.2/hadoop-project-dist/hadoop-common/Superusers.html
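As a rough mental model of the rule the linked page describes: a superuser may impersonate a user only if that user is listed in `hadoop.proxyuser.<superuser>.users` or belongs to a group in `hadoop.proxyuser.<superuser>.groups`, and the request comes from a host in `hadoop.proxyuser.<superuser>.hosts`, with `*` matching anything and a missing property allowing nothing. The KMS properties in the February 2 post follow the same pattern with the `hadoop.kms.proxyuser` prefix. The sketch below encodes that rule in plain Java; it is a simplified, hypothetical illustration (no IP ranges, no hostname resolution), not Hadoop's own implementation.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

/** Hypothetical, simplified model of the proxy-user rule; not Hadoop's implementation. */
final class ProxyUserCheck {

    /** Splits a comma-separated config value; a missing key yields an empty set (nothing allowed). */
    private static Set<String> values(Map<String, String> conf, String key) {
        Set<String> out = new HashSet<>();
        String v = conf.get(key);
        if (v != null) {
            for (String s : v.split(",")) {
                if (!s.trim().isEmpty()) {
                    out.add(s.trim());
                }
            }
        }
        return out;
    }

    /** "*" allows anything; otherwise at least one candidate must be listed. */
    private static boolean allows(Set<String> allowed, Set<String> candidates) {
        if (allowed.contains("*")) {
            return true;
        }
        for (String c : candidates) {
            if (allowed.contains(c)) {
                return true;
            }
        }
        return false;
    }

    /**
     * prefix is "hadoop.proxyuser" for core-site.xml or "hadoop.kms.proxyuser" for KMS;
     * superUser is the short name of the authenticated (real) user.
     */
    static boolean mayImpersonate(Map<String, String> conf, String prefix, String superUser,
                                  String user, Set<String> userGroups, String remoteHost) {
        String base = prefix + "." + superUser;
        boolean userAllowed = allows(values(conf, base + ".users"), Collections.singleton(user))
                || allows(values(conf, base + ".groups"), userGroups);
        boolean hostAllowed = allows(values(conf, base + ".hosts"), Collections.singleton(remoteHost));
        return userAllowed && hostAllowed;
    }

    public static void main(String[] args) {
        Map<String, String> conf = new HashMap<>();
        conf.put("hadoop.proxyuser.super.hosts", "host1,host2");
        conf.put("hadoop.proxyuser.super.groups", "group1,group2");
        Set<String> joeGroups = Collections.singleton("group1");
        System.out.println(mayImpersonate(conf, "hadoop.proxyuser", "super", "joe", joeGroups, "host1")); // true
        System.out.println(mayImpersonate(conf, "hadoop.proxyuser", "super", "joe", joeGroups, "host3")); // false
    }
}
```

Requiring both checks to pass is why a wildcard for users or groups alone is not enough; the hosts property must allow the caller as well.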

Wednesday, February 8, 2017

High-performance open source regex packages

multiregexp: a Java library that checks multiple regular expressions with a single deterministic automaton. It is a thin wrapper around dk.brics.automaton:

https://github.com/fulmicoton/multiregexp

RE2/J: linear time regular expression matching in Java:

https://github.com/google/re2j
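Both libraries avoid the backtracking that can make `java.util.regex` take exponential time on some patterns: RE2/J matches in linear time by running an automaton, and multiregexp compiles several patterns into one deterministic automaton so that a single pass over the input tells you which of them match. The toy sketch below only illustrates the underlying idea, tracking sets of automaton states instead of backtracking, for several patterns in one left-to-right pass. It simulates the automata rather than building a DFA, supports just literal characters, `.` and `*`, matches whole strings only, and is not the API of either library; the class name `MultiMatcher` is hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.BitSet;
import java.util.List;

/** Toy multi-pattern matcher: literals, '.' and postfix '*' only; whole-string match. */
final class MultiMatcher {

    /** One compiled pattern: atoms[i] is a literal char or '.', star[i] marks a following '*'. */
    private static final class Compiled {
        final char[] atoms;
        final boolean[] star;

        Compiled(String p) {
            char[] a = new char[p.length()];
            boolean[] s = new boolean[p.length()];
            int n = 0;
            for (int i = 0; i < p.length(); i++) {
                a[n] = p.charAt(i);
                s[n] = i + 1 < p.length() && p.charAt(i + 1) == '*';
                if (s[n]) {
                    i++; // consume the '*'
                }
                n++;
            }
            atoms = Arrays.copyOf(a, n);
            star = Arrays.copyOf(s, n);
        }

        /** Epsilon moves: a starred atom may be skipped. State atoms.length means "accept". */
        BitSet closure(BitSet states) {
            for (int i = 0; i < atoms.length; i++) {
                if (states.get(i) && star[i]) {
                    states.set(i + 1);
                }
            }
            return states;
        }

        BitSet start() {
            BitSet s = new BitSet();
            s.set(0);
            return closure(s);
        }

        /** Advances every live state on c at once, so no input character is ever re-read. */
        BitSet step(BitSet states, char c) {
            BitSet next = new BitSet();
            for (int i = states.nextSetBit(0); i >= 0 && i < atoms.length; i = states.nextSetBit(i + 1)) {
                if (atoms[i] == '.' || atoms[i] == c) {
                    next.set(star[i] ? i : i + 1);
                }
            }
            return closure(next);
        }

        boolean accepts(BitSet states) {
            return states.get(atoms.length);
        }
    }

    private final List<Compiled> patterns = new ArrayList<>();

    MultiMatcher(String... regexes) {
        for (String r : regexes) {
            patterns.add(new Compiled(r));
        }
    }

    /** Indices of all patterns matching the whole input; time is O(input length x total pattern size). */
    List<Integer> matchAll(String input) {
        BitSet[] live = new BitSet[patterns.size()];
        for (int p = 0; p < live.length; p++) {
            live[p] = patterns.get(p).start();
        }
        for (int k = 0; k < input.length(); k++) {
            for (int p = 0; p < live.length; p++) {
                live[p] = patterns.get(p).step(live[p], input.charAt(k));
            }
        }
        List<Integer> hits = new ArrayList<>();
        for (int p = 0; p < live.length; p++) {
            if (patterns.get(p).accepts(live[p])) {
                hits.add(p);
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        MultiMatcher m = new MultiMatcher("a*b", "x.*", "ab*a");
        System.out.println(m.matchAll("aaab")); // [0]
        System.out.println(m.matchAll("abba")); // [2]
    }
}
```

Precomputing every reachable state set into a DFA, as multiregexp does, turns each step into a single table lookup at the cost of more memory up front.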

Monday, February 6, 2017

My first Scala program on Spark: word count

[root@john1 john]# cd /var/lib/hadoop-hdfs

[root@john1 hadoop-hdfs]# sudo -u hdfs spark-shell --master yarn --deploy-mode client

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel).

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 1.6.0
      /_/

Using Scala version 2.10.5 (Java HotSpot(TM) 64-Bit Server VM, Java 1.7.0_67)

Type in expressions to have them evaluated.

Type :help for more information.

Spark context available as sc (master = yarn-client, app id = application_1486166793688_0005).

17/02/06 16:13:53 WARN metastore.ObjectStore: Version information not found in metastore. hive.metastore.schema.verification is not enabled so recording the schema version 1.1.0

17/02/06 16:13:54 WARN metastore.ObjectStore: Failed to get database default, returning NoSuchObjectException

SQL context available as sqlContext.

scala> val logFile = "hdfs://john1.dg:8020/johnz/text/test.txt"

logFile: String = hdfs://john1.dg:8020/johnz/text/test.txt

scala> val file = sc.textFile(logFile)

file: org.apache.spark.rdd.RDD[String] = hdfs://john1.dg:8020/johnz/text/test.txt MapPartitionsRDD[1] at textFile at <console>:29

scala> val counts = file.flatMap(_.split(" ")).map(word => (word,1)).reduceByKey(_ + _)

counts: org.apache.spark.rdd.RDD[(String, Int)] = ShuffledRDD[4] at reduceByKey at <console>:31

scala> counts.collect()

res0: Array[(String, Int)] = Array((executes,1), (is,1), (expressive,1), (real,1), ((clustered),1), ("",1), (apache,1), (computing,1), (fast,,1), (job,1), (environment.,1), (spark,1), (a,1), (in,1), (which,1), (extremely,1), (distributed,1), (time,1), (and,1), (system,1))
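To see what the three RDD transformations compute, here is the same pipeline applied to a local list of lines with plain Java 8 streams (no Spark involved; the class name `LocalWordCount` is hypothetical): `flatMap` splits each line on a single space, each word is paired with a count of 1, and the counts are summed per word as `reduceByKey(_ + _)` does. Because the split is on a single space, a leading space or two consecutive spaces yield an empty token, which is the likely source of the `("",1)` entry in the output above.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

final class LocalWordCount {

    /** Local equivalent of flatMap(_.split(" ")).map(word => (word, 1)).reduceByKey(_ + _). */
    static Map<String, Integer> count(List<String> lines) {
        return lines.stream()
                .flatMap(line -> Arrays.stream(line.split(" "))) // same split as the Scala code
                .collect(Collectors.toMap(word -> word, word -> 1, Integer::sum, TreeMap::new));
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("spark is fast", "spark  is expressive"); // note the double space
        System.out.println(count(lines)); // prints {=1, expressive=1, fast=1, is=2, spark=2}
    }
}
```

In Spark the summing happens per partition first and then across the shuffle, which is why `reduceByKey` produced a `ShuffledRDD`; the local version just merges into one map.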

Thursday, February 2, 2017

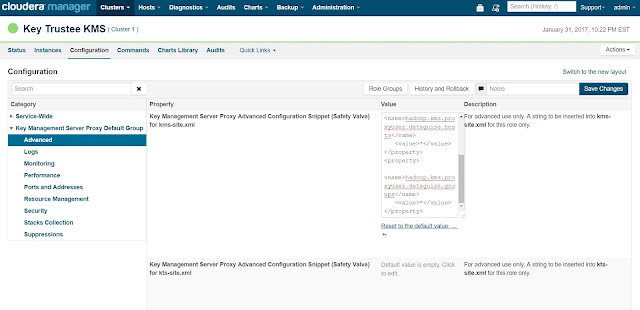

Solving a KMS authentication issue in a CDH5 HA environment

2017-01-31 21:53:41,538 WARN org.apache.hadoop.security.token.delegation.web.DelegationTokenAuthenticationFilter: Authentication exception: User: testuser@DG.COM is not allowed to impersonate testuser

When the above warning appears in the KMS log file (/var/log/kms-keytrustee/kms.log), add the following properties to the KMS configuration in Cloudera Manager (Cloudera Manager > Key Trustee KMS > Configuration > Key Management Server Proxy Default Group > Advanced). After that, we were able to submit jobs on the cluster as testuser:

<property>
  <name>hadoop.kms.proxyuser.testuser.users</name>
  <value>*</value>
</property>

<property>
  <name>hadoop.kms.proxyuser.testuser.hosts</name>
  <value>*</value>
</property>

<property>
  <name>hadoop.kms.proxyuser.testuser.groups</name>
  <value>*</value>
</property>

On CDH 5.8.4 the UI has changed, so we had to enter the content of these XML properties through the new UI.
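The warning names the Kerberos principal (`testuser@DG.COM`), while the property names use its short name (`testuser`). A small helper like the hypothetical one below can turn such a log line into the three `<property>` entries. It is a sketch under assumptions: the short name is taken as the text before the first `/` or `@`, which matches only the default Kerberos name mapping (custom `auth_to_local` rules can map names differently), and it writes whatever value you pass, such as the `*` used above.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper for the "is not allowed to impersonate" KMS warning. */
final class KmsProxyUserHelper {

    // Matches e.g. "User: testuser@DG.COM is not allowed to impersonate testuser"
    private static final Pattern DENIED =
            Pattern.compile("User: (\\S+) is not allowed to impersonate (\\S+)");

    /** Short name of the real (proxy) user found in the log line, if the line matches. */
    static Optional<String> proxyUserShortName(String logLine) {
        Matcher m = DENIED.matcher(logLine);
        if (!m.find()) {
            return Optional.empty();
        }
        String principal = m.group(1); // e.g. testuser@DG.COM or service/host@REALM
        // Assumption: default Kerberos mapping, i.e. keep the text before the first '/' or '@'.
        return Optional.of(principal.split("[/@]", 2)[0]);
    }

    /** Builds the users/hosts/groups KMS proxy-user properties for one short name. */
    static String properties(String shortName, String value) {
        StringBuilder xml = new StringBuilder();
        for (String kind : new String[] {"users", "hosts", "groups"}) {
            xml.append("<property>\n")
               .append("  <name>hadoop.kms.proxyuser.").append(shortName).append('.')
               .append(kind).append("</name>\n")
               .append("  <value>").append(value).append("</value>\n")
               .append("</property>\n");
        }
        return xml.toString();
    }

    public static void main(String[] args) {
        String line = "2017-01-31 21:53:41,538 WARN org.apache.hadoop.security.token.delegation.web."
                + "DelegationTokenAuthenticationFilter: Authentication exception: "
                + "User: testuser@DG.COM is not allowed to impersonate testuser";
        proxyUserShortName(line).ifPresent(user -> System.out.print(properties(user, "*")));
    }
}
```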
