java -Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.port=18745 -Dcom.sun.management.jmxremote.ssl=false -Djava.rmi.server.hostname=192.168.6.81 -Xrunjdwp:transport=dt_socket,address=8002,server=y,suspend=n -jar "xxxxAgent-jetty.jar"

Then, from the remote machine, run jconsole and enter the IP and JMX port from the command above (192.168.6.81:18745) in the jconsole GUI.
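If you want to check the connection without the jconsole GUI, the same endpoint can be reached from plain Java. This is only a minimal sketch: the class name JmxPing and its two methods are made up for illustration, and it assumes the target JVM was started with the flags above (no authentication, no SSL).

```java
import java.io.IOException;
import javax.management.MBeanServerConnection;
import javax.management.remote.JMXConnector;
import javax.management.remote.JMXConnectorFactory;
import javax.management.remote.JMXServiceURL;

// Hypothetical helper, not part of the original setup.
public class JmxPing {
    // Builds the standard RMI-based JMX service URL for a host and JMX port.
    public static JMXServiceURL serviceUrl(String host, int port) throws IOException {
        return new JMXServiceURL(
                "service:jmx:rmi:///jndi/rmi://" + host + ":" + port + "/jmxrmi");
    }

    // Connects, asks the remote MBean server how many MBeans it has, and disconnects.
    public static int countMBeans(String host, int port) throws IOException {
        try (JMXConnector connector = JMXConnectorFactory.connect(serviceUrl(host, port))) {
            MBeanServerConnection connection = connector.getMBeanServerConnection();
            return connection.getMBeanCount();
        }
    }

    public static void main(String[] args) throws IOException {
        // IP and port are the ones from the java command above.
        System.out.println("MBeans: " + countMBeans("192.168.6.81", 18745));
    }
}
```

If this throws a connection error, jconsole will most likely fail the same way, so it is a quick way to separate firewall or hostname problems from GUI problems.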

Your project can be on or off. Your project's priority can be changed. Your job can be changed. But the technology is always heading north!

Tuesday, November 7, 2017

Tuesday, August 1, 2017

log4j trick when creating ConsoleAppender from Java code

Right way to do it:

/**
 * Dynamically initialize a log4j console appender so we can log info to stdout
 * with specified level and pattern layout.
 */
public static void initStdoutLogging(Level logLevel, String patternLayout) {
    LogManager.resetConfiguration();
    ConsoleAppender appender = new ConsoleAppender(new PatternLayout(patternLayout));
    appender.setThreshold(logLevel);
    LogManager.getRootLogger().addAppender(appender);
}

initStdoutLogging(Level.INFO, PatternLayout.TTCC_CONVERSION_PATTERN);

When enabling logging for Spark, we need to call the above initStdoutLogging twice: once in the driver and once in the executor. Otherwise, you only see logs from the driver.

To read the executor logs, you have to use something like:

sudo -u hdfs yarn logs -applicationId application_1501527690446_0066

Analysis:

If I initialize it like this:

ConsoleAppender ca = new ConsoleAppender();

ca.setLayout(new PatternLayout(PatternLayout.TTCC_CONVERSION_PATTERN));

it gives an error and breaks the logging.

Error output:

log4j:ERROR No output stream or file set for the appender named [null].

If I initialize it like this it works fine:

ConsoleAppender ca = new ConsoleAppender(new PatternLayout(PatternLayout.TTCC_CONVERSION_PATTERN));

The reason:

If you look at the source for ConsoleAppender:

public ConsoleAppender(Layout layout) {
    this(layout, SYSTEM_OUT);
}

public ConsoleAppender(Layout layout, String target) {
    setLayout(layout);
    setTarget(target);
    activateOptions();
}

You can see that ConsoleAppender(Layout) passes SYSTEM_OUT as the target, and that it calls activateOptions after setting the layout and target.

If you use the no-argument constructor and call setLayout yourself, you also need to set the target explicitly and call activateOptions.

Monday, June 12, 2017

JavaOptions to pass to Spark driver and Snappy native library to pass to Java program

/usr/hdp

JavaOptions to pass to Spark driver: Here is a Hortonworks example:

JavaOptions=-Dhdp.version=2.4.2.0-258 -Dspark.driver.extraJavaOptions=-Dhdp.version=2.4.2.0-258

Snappy native library to pass to a Java program. Here is an example for CDH5:

1. Copy /opt/cloudera/parcels/CDH-5.5.1-1.cdh5.5.1.p0.11/lib/hadoop/lib/native/* to the machine that runs your Java program and put the files in any directory, such as /opt/native

2. Run your Java program with the following Java option:

JavaOptions=-Djava.library.path=/opt/native

Wednesday, May 10, 2017

Search anything from a bugzilla bug given a range of bug numbers

[root@centos my-data-set]# for ((i=13420;i<13500;i++)); do echo $i >> /tmp/search_result.txt; curl http://192.168.5.105/bugzilla/show_bug.cgi?id=$i | grep "passed to DE constructor may be null" >> /tmp/search_result.txt; done;

Tuesday, May 2, 2017

A simple way to clone 10,000 files to cluster

# Generate 10,000 files from one seed and put them into 100 subdirectories

[hdfs@cdh1 tmp]$ for((i=1;i<=100;i++));do mkdir -p 10000files/F$i; for((j=1;j<=100;j++));do echo $i-$j; cp 1000line.csv 10000files/F$i/$i-$j.csv;done;done;

# Copy them to the cluster, one subdirectory at a time.

[hdfs@cdh1 tmp]$ for((i=1;i<=100;i++));do echo $i; hadoop fs -mkdir /JohnZ/10000files/F$i; hadoop fs -copyFromLocal 10000files/F$i/* /JohnZ/10000files/F$i/.;done;

Tuesday, April 25, 2017

Prepare Python machine learning environment on Centos 6.6 to train data

# Major points: 1. This needs Python 2.7, not 2.6. CentOS 6.6 uses Python 2.6 for the OS itself, so upgrading the system Python to 2.7 is not an option; install Python 2.7 alongside 2.6 instead. 2. Use setuptools to install pip, then use pip to install the rest.

# Download dependency files

yum groupinstall "Development tools"

yum -y install gcc gcc-c++ numpy python-devel scipy

yum install zlib-devel bzip2-devel openssl-devel ncurses-devel sqlite-devel

# Compile and install Python 2.7.13

wget https://www.python.org/ftp/python/2.7.13/Python-2.7.13.tgz

tar xzf Python-2.7.13.tgz

cd Python-2.7.13

./configure

# make altinstall is used to prevent replacing the default python binary file /usr/bin/python.

make altinstall

# Download setuptools using wget:

wget --no-check-certificate https://pypi.python.org/packages/source/s/setuptools/setuptools-1.4.2.tar.gz

# Extract the files from the archive:

tar -xvf setuptools-1.4.2.tar.gz

# Enter the extracted directory:

cd setuptools-1.4.2

# Install setuptools using the Python we've installed (2.7.13)

# python2.7 setup.py install

/opt/python-2.7.13/Python-2.7.13/python ./setup.py install

# install pip

curl https://raw.githubusercontent.com/pypa/pip/master/contrib/get-pip.py | python2.7 -

or (the following works for me)

[root@centos python-2.7.13]# /opt/python-2.7.13/Python-2.7.13/python ./setuptools/setuptools-1.4.2/easy_install.py pip

# install numpy

[root@centos python-2.7.13]# /opt/python-2.7.13/Python-2.7.13/python -m pip install numpy

# Install SciPy

[root@centos python-2.7.13]# /opt/python-2.7.13/Python-2.7.13/python -m pip install scipy

# Install Scikit

[root@centos python-2.7.13]# /opt/python-2.7.13/Python-2.7.13/python -m pip install scikit-learn

# Install nltk

[root@centos python-2.7.13]# /opt/python-2.7.13/Python-2.7.13/python -m pip install nltk

# Download nltk data (will be stored under /root/nltk_data)

[root@centos SVM]# /opt/python-2.7.13/Python-2.7.13/python -m nltk.downloader all

Tuesday, March 21, 2017

Mapreduce logs have weird behavior on HDP 2.3 Tez

When launching MapReduce on Tez, our application logs do not show up in the HDP UI. Clicking ‘History’ shows nothing from our code, although the Hadoop system logs are visible.

But if you use the following command, you get the MapReduce logs on stdout:

sudo -u hdfs yarn logs -applicationId application_1490133530166_0002

But the format is modified:

2017-03-21 15:04:24,708 [ERROR] [TezChild] |common.FindAndExitMapRunner|: caught throwable when run mapper:
java.lang.UnsupportedOperationException: Input only available on map

The ‘source’ is changed to ‘TezChild’.

Our package name is truncated to its last part, so the Java class name is no longer fully qualified. In this example, “com.xxx.hadoop.common.FindAndExitMapRunner” becomes “common.FindAndExitMapRunner”.

For comparison, here is what a normal MapReduce log line looks like (i.e. without Tez); you can see the full package name and class name:

2017-03-20 14:29:54,778 INFO [main] com.xxx.hadoop.common.ColumnMap: Columnar Mapper

Important! To read the logs, you must run the command as the exact user who launched the application (here: sudo -u hdfs).

Otherwise, you will see the following error:

"Log aggregation has not completed or is not enabled."

Monday, March 6, 2017

Additional jar files when running Spark under Hadoop YARN mode (CDH 5.10.0 with Scala 2.10 and Spark 1.6.0)

lrwxrwxrwx 1 root root 91 Feb 23 15:02 spark-core_2.10-1.6.0-cdh5.10.0.jar -> /opt/cloudera/parcels/CDH-5.10.0-1.cdh5.10.0.p0.41/jars/spark-core_2.10-1.6.0-cdh5.10.0.jar

lrwxrwxrwx 1 root root 80 Feb 23 15:15 scala-library-2.10.6.jar -> /opt/cloudera/parcels/CDH-5.10.0-1.cdh5.10.0.p0.41/jars/scala-library-2.10.6.jar

lrwxrwxrwx 1 root root 37 Mar 6 14:06 commons-lang3-3.3.2.jar -> ../../../jars/commons-lang3-3.3.2.jar

-rw-r--r-- 1 root root 185676 Mar 6 14:11 typesafe-config-2.10.1.jar

lrwxrwxrwx 1 root root 55 Mar 6 14:26 akka-actor_2.10-2.2.3-shaded-protobuf.jar -> ../../../jars/akka-actor_2.10-2.2.3-shaded-protobuf.jar

lrwxrwxrwx 1 root root 56 Mar 6 14:28 akka-remote_2.10-2.2.3-shaded-protobuf.jar -> ../../../jars/akka-remote_2.10-2.2.3-shaded-protobuf.jar

lrwxrwxrwx 1 root root 55 Mar 6 14:29 akka-slf4j_2.10-2.2.3-shaded-protobuf.jar -> ../../../jars/akka-slf4j_2.10-2.2.3-shaded-protobuf.jar

lrwxrwxrwx 1 root root 70 Mar 6 14:40 spark-assembly-1.6.0-cdh5.10.0-hadoop2.6.0-cdh5.10.0.jar -> ../../../jars/spark-assembly-1.6.0-cdh5.10.0-hadoop2.6.0-cdh5.10.0.jar

[root@john2 lib]# pwd

/opt/cloudera/parcels/CDH-5.10.0-1.cdh5.10.0.p0.41/lib/hadoop-yarn/lib

Thursday, February 16, 2017

My co-worker Shawn's blogs for Hadoop machine setup and installation

http://wp.huangshiyang.com/env-set-up-centos

Solving protobuf version conflicting in your Java applications

Due to the bad design of protobuf, we ran into version conflicts from time to time. We finally worked out a routine way to solve this kind of issue.

Assume:

1. Using Maven, you have found all dependency jar files.

2. These jar files contain different versions of protobuf (or, as in the example below, of Google's collections classes), so your application fails to work. For example, the MapMaker.keyEquivalence method is not found.

3. You have Java de-compiler installed.

Solution:

1. Rename Maven repository (for example, rename .m2\repository to .m2\repository_backup)

2. Re-generate Maven repository (mvn test or mvn package on pom.xml file)

3. Under Windows Explorer, go to .m2\repository folder and type '.jar' from search box (top right corner).

4. Now, you get all jar files under the same window.

5. Copy all and paste them into a single folder (i.e. c:\my_jars).

6. Move these jar files into Linux machine since we will need some Linux scripting there.

7. Assume all of these jar files are under your Linux machine now under subdirectory /tmp/myJars

8. Run the following script (this assumes a JDK is installed so that the jar tool is available on your Linux system; otherwise, install a JDK first):

[root@john1 myJars]# for x in *.jar; do m=$(jar -tvf "$x" | grep com.google.common.collect.MapMaker) && printf '%s\n%s\n' "$x" "$m"; done

9. The above finds every jar file that contains the class MapMaker and prints its name followed by the matching entries.

10. In my case, it found the following two jar files:

google-collections-1.0.jar

1479 Wed Dec 30 10:59:50 PST 2009 com/google/common/collect/MapMaker$1.class

1756 Wed Dec 30 10:59:50 PST 2009 com/google/common/collect/MapMaker$ComputationExceptionReference.class

2041 Wed Dec 30 10:59:50 PST 2009 com/google/common/collect/MapMaker$LinkedSoftEntry.class

2055 Wed Dec 30 10:59:50 PST 2009 com/google/common/collect/MapMaker$LinkedStrongEntry.class

guava-14.0.1.jar

227 Thu Mar 14 19:56:56 PDT 2013 com/google/common/collect/MapMaker$1.class

2329 Thu Mar 14 19:56:56 PDT 2013 com/google/common/collect/MapMaker$ComputingMapAdapter.class

2545 Thu Mar 14 19:56:56 PDT 2013 com/google/common/collect/MapMaker$NullComputingConcurrentMap.class

4134 Thu Mar 14 19:56:56 PDT 2013 com/google/common/collect/MapMaker$NullConcurrentMap.class

11. Use a Java decompiler to search for 'keyEquivalence'; you will find that only guava-14.0.1.jar contains it.

12. Remove google-collections-1.0.jar from your classpath. The errors about MapMaker.keyEquivalence not being found (or similar) now go away.
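The jar search in step 8 can also be done from Java, which helps when the jar files stay on a Windows machine. The following is only a sketch under assumptions: the class JarClassFinder and its method are invented for illustration, and it matches on the entry path prefix (with slashes) rather than on a grep pattern.

```java
import java.io.File;
import java.io.IOException;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Enumeration;
import java.util.List;
import java.util.jar.JarEntry;
import java.util.jar.JarFile;

// Hypothetical helper, not part of the original steps.
public class JarClassFinder {
    // Returns the names of the jars in dir that contain at least one entry whose
    // path starts with entryPrefix, e.g. "com/google/common/collect/MapMaker".
    public static List<String> findJarsContaining(File dir, String entryPrefix)
            throws IOException {
        List<String> hits = new ArrayList<>();
        File[] files = dir.listFiles((d, name) -> name.endsWith(".jar"));
        if (files == null) {
            return hits; // dir does not exist or is not a directory
        }
        for (File file : files) {
            try (JarFile jar = new JarFile(file)) {
                Enumeration<JarEntry> entries = jar.entries();
                while (entries.hasMoreElements()) {
                    if (entries.nextElement().getName().startsWith(entryPrefix)) {
                        hits.add(file.getName());
                        break; // one match is enough to report this jar
                    }
                }
            }
        }
        Collections.sort(hits);
        return hits;
    }

    public static void main(String[] args) throws IOException {
        // /tmp/myJars is the directory used in the steps above.
        System.out.println(findJarsContaining(
                new File("/tmp/myJars"), "com/google/common/collect/MapMaker"));
    }
}
```

Any jar reported more than once for the same class prefix is a candidate for the kind of conflict described above.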

Tuesday, February 14, 2017

Hadoop Proxy user - Superusers Acting On Behalf Of Other Users

https://hadoop.apache.org/docs/r2.7.2/hadoop-project-dist/hadoop-common/Superusers.html

Wednesday, February 8, 2017

High performance regex open source packages

Java library to check for multiple regexp with a single deterministic automaton. Just a wrapper around dk.brics.automaton:

https://github.com/fulmicoton/multiregexp

RE2/J: linear time regular expression matching in Java:

https://github.com/google/re2j

Monday, February 6, 2017

My first scala from Spark system: word count

[root@john1 john]# cd /var/lib/hadoop-hdfs

[root@john1 hadoop-hdfs]# sudo -u hdfs spark-shell --master yarn --deploy-mode client

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel).

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 1.6.0
      /_/

Using Scala version 2.10.5 (Java HotSpot(TM) 64-Bit Server VM, Java 1.7.0_67)

Type in expressions to have them evaluated.

Type :help for more information.

Spark context available as sc (master = yarn-client, app id = application_1486166793688_0005).

17/02/06 16:13:53 WARN metastore.ObjectStore: Version information not found in metastore. hive.metastore.schema.verification is not enabled so recording the schema version 1.1.0

17/02/06 16:13:54 WARN metastore.ObjectStore: Failed to get database default, returning NoSuchObjectException

SQL context available as sqlContext.

scala> val logFile = "hdfs://john1.dg:8020/johnz/text/test.txt"

logFile: String = hdfs://john1.dg:8020/johnz/text/test.txt

scala> val file = sc.textFile(logFile)

file: org.apache.spark.rdd.RDD[String] = hdfs://john1.dg:8020/johnz/text/test.txt MapPartitionsRDD[1] at textFile at <console>:29

scala> val counts = file.flatMap(_.split(" ")).map(word => (word,1)).reduceByKey(_ + _)

counts: org.apache.spark.rdd.RDD[(String, Int)] = ShuffledRDD[4] at reduceByKey at <console>:31

scala> counts.collect()

res0: Array[(String, Int)] = Array((executes,1), (is,1), (expressive,1), (real,1), ((clustered),1), ("",1), (apache,1), (computing,1), (fast,,1), (job,1), (environment.,1), (spark,1), (a,1), (in,1), (which,1), (extremely,1), (distributed,1), (time,1), (and,1), (system,1))
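To see what the flatMap / map / reduceByKey chain computes without a cluster, here is a rough local analogue in plain Java streams. It is not Spark code: the class LocalWordCount and the sample lines are made up for illustration. It splits on a single space the same way, which is also why an empty token such as ("",1) can appear in the result above when the input has an empty line or two consecutive spaces.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

// Hypothetical local (non-distributed) analogue of the spark-shell session.
public class LocalWordCount {
    // flatMap: split every line on a single space;
    // groupingBy + counting: plays the role of map(word => (word, 1)).reduceByKey(_ + _).
    public static Map<String, Long> countWords(List<String> lines) {
        return lines.stream()
                .flatMap(line -> Arrays.stream(line.split(" ")))
                .collect(Collectors.groupingBy(
                        word -> word, TreeMap::new, Collectors.counting()));
    }

    public static void main(String[] args) {
        // The empty line yields one empty-string token, as in the Spark output.
        List<String> lines = Arrays.asList(
                "apache spark is fast", "", "spark is expressive");
        System.out.println(countWords(lines));
    }
}
```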

Thursday, February 2, 2017

Solve KMS authentication issue under CDH5 HA environment

2017-01-31 21:53:41,538 WARN org.apache.hadoop.security.token.delegation.web.DelegationTokenAuthenticationFilter: Authentication exception: User: testuser@DG.COM is not allowed to impersonate testuser

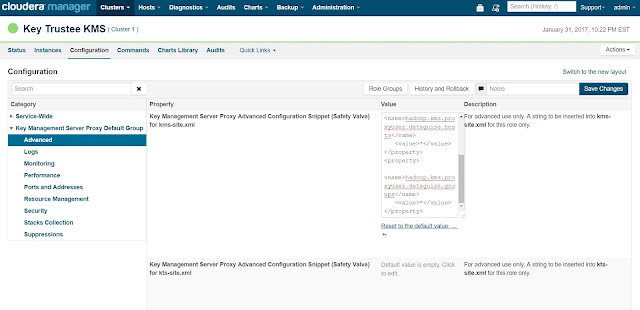

When the above message appears in the KMS log file (/var/log/kms-keytrustee/kms.log), add the properties below to the KMS configuration in Cloudera Manager (Cloudera Manager > Key Trustee KMS > Configuration > Key Management Server Proxy Default Group > Advanced). After that, we were able to submit jobs on the cluster as testuser:

<property>
  <name>hadoop.kms.proxyuser.testuser.users</name>
  <value>*</value>
</property>

<property>
  <name>hadoop.kms.proxyuser.testuser.hosts</name>
  <value>*</value>
</property>

<property>
  <name>hadoop.kms.proxyuser.testuser.groups</name>
  <value>*</value>
</property>

On CDH 5.8.4 the UI has changed, and the content of these XML properties has to be entered into the new UI instead.

Wednesday, January 18, 2017

Dynamically load jar file in Java

import java.io.File;
import java.lang.reflect.Method;
import java.net.URL;
import java.net.URLClassLoader;

// Call site: customJarHomePath is the folder that holds the extra jar files.
// Note: the cast below only works when the context class loader is a URLClassLoader
// (true for the default application class loader up to Java 8).
File customJarHome = new File(customJarHomePath);
URLClassLoader classLoader = (URLClassLoader) Thread.currentThread().getContextClassLoader();
addCustomJars(customJarHome, classLoader);

public void addCustomJars(File file, URLClassLoader classLoader) {
    File[] jarFiles = file.listFiles();
    if (jarFiles != null) {
        if (logger.isDebugEnabled()) {
            URL[] urls = classLoader.getURLs();
            for (URL url : urls) {
                logger.debug("URL before custom jars are added:" + url.toString());
            }
        }

        // URLClassLoader.addURL(URL) is protected, so look it up via reflection.
        Class<?> sysclass = URLClassLoader.class;
        Method method = null;
        try {
            method = sysclass.getDeclaredMethod("addURL", URL.class);
        } catch (NoSuchMethodException e1) {
            logger.error("Unable to find addURL method", e1);
            return;
        } catch (SecurityException e1) {
            logger.error("Unable to get addURL method", e1);
            return;
        }
        method.setAccessible(true);

        // add each jar file under such folder
        for (File jarFile : jarFiles) {
            if (jarFile.isFile() && jarFile.getName().endsWith(".jar")) {
                try {
                    method.invoke(classLoader, new Object[]{ jarFile.toURI().toURL() });
                    logger.info("Add custom jar " + jarFile.toString());
                } catch (Exception e) {
                    logger.error("Failed to add classpath for " + jarFile.getName(), e);
                }
            }
        }

        if (logger.isDebugEnabled()) {
            URL[] urls = classLoader.getURLs();
            for (URL url : urls) {
                logger.debug("URL after custom jars are added:" + url.toString());
            }
        }
    }
}
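On Java 9 and later the application class loader is no longer a URLClassLoader, so casting the context class loader as above fails with a ClassCastException. One possible alternative, shown here only as a sketch, is to build a new child URLClassLoader over the jar folder instead of patching the existing loader. The class CustomJarLoader and the method newLoaderFor are names invented for this example.

```java
import java.io.File;
import java.net.MalformedURLException;
import java.net.URL;
import java.net.URLClassLoader;
import java.util.ArrayList;
import java.util.List;

// Hypothetical alternative that avoids reflection on addURL.
public class CustomJarLoader {
    // Builds a child class loader that can see every *.jar file directly under jarHome.
    public static URLClassLoader newLoaderFor(File jarHome, ClassLoader parent)
            throws MalformedURLException {
        List<URL> urls = new ArrayList<>();
        File[] files = jarHome.listFiles();
        if (files != null) {
            for (File file : files) {
                if (file.isFile() && file.getName().endsWith(".jar")) {
                    urls.add(file.toURI().toURL());
                }
            }
        }
        // Classes not found in the parent are looked up in the collected jars.
        return new URLClassLoader(urls.toArray(new URL[0]), parent);
    }
}
```

A caller would then load classes through the returned loader (loader.loadClass(name)) or install it with Thread.currentThread().setContextClassLoader(loader). Unlike the reflection approach, classes already loaded by the parent loader cannot see the new jars.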
